I did not issue a code red

........... Sergey has been hanging out with our engineers for a while now. ....... And he’s a deep mathematician and a computer scientist. So to him, the underlying technology — I think if I were to use his words, he would say it’s the most exciting thing he has seen in his lifetime. So it’s all that excitement, and I’m glad. They’ve always said, call us whenever you need to, and I call them. ............. when many parts of the company are moving, you can create bottlenecks, and you can slow down. ......... AI is the most profound technology humanity will ever work on. I’ve always felt that for a while. I think it will get to the essence of what humanity is. ........ I remember talking to Elon eight years ago, and he was deeply concerned about AI safety then. And I think he has been consistently concerned. ............AI is too important an area not to regulate. It’s also too important an area not to regulate well.

........ I’ve never seen a technology in its earliest days with as much concern as AI. ........ To me at least there is no way to do this effectively without getting governments involved. .......... It is so clear to me that these systems are going to be very, very capable. And so it almost doesn’t matter whether you’ve reached AGI or not. You’re going to have systems which are capable of delivering benefits at a scale we have never seen before and potentially causing real harm. .......... There is a spectrum of possibilities. ......... They could really progress in a two-year time frame. And so we have to really make sure we are vigilant and working with it. ........... AI, like climate change, is it affects everyone. .......... No one company can get it right. We have been very clear about responsible AI — one of the first companies to put out AI principles. We issue progress reports. .......... if we have a foundational approach to privacy, that should apply to AI technologies, too. ........ health care is a very regulated industry, right? And so when AI is going to come in, it has to conform with all regulations. .......... there’s a non-zero risk that this stuff does something really, really bad ......... it’s like asking, hey, why aren’t you moving fast and breaking things again? ....... I actually — I got a text from a software engineer, a friend of mine, the other day who was asking me if he should go into construction or welding because all of the software jobs are going to be taken by these large language models. ............ some of the grunt work you’re doing as part of programming is going to get better. So maybe it’ll be more fun to program over time — no different from the Google Docs make it easier to write. ........... programming is going to become more accessible to more people. .......... 
we are going to evolve to a more natural language way of programming over time .......... When Bard is at its best, it answers my questions without me having to visit another website. I know you’re cognizant of this. But man, if Bard gets as good as you want it to be, how does the web survive? .......... it turns out if you order your fries well done, which is not on the menu, they arrive much crispier and more delicious.

1/ Steve Jobs compared his computer to a bicycle for the mind, revolutionizing the way we use technology. https://t.co/domnIWYuiG #SteveJobs #computerrevolution #personalcomputers

— Paramendra Kumar Bhagat (@paramendra) March 31, 2023

4/ AI has been around for a long time, but ChatGPT is bringing it to the masses, just like Netscape did for the internet. https://t.co/domnIWYuiG #ChatGPT #motorbikeforthemind #technology #innovation #futuretech

— Paramendra Kumar Bhagat (@paramendra) March 31, 2023

7/ Is ChatGPT the motorbike for the mind, just like Steve Jobs' computer was the bicycle for the mind? https://t.co/domnIWYuiG #Afghanistan #weddingparties #ChatGPT

— Paramendra Kumar Bhagat (@paramendra) March 31, 2023

Add extra dose of health and taste to your diet with Patanjali Honey! It is rich in minerals, vitamins and other nutritious elements which can be a perfectly healthy choice for those trying to lose weight. #PatanjaliProducts #Honey pic.twitter.com/qpGolFBYMc

— Patanjali Ayurved (@PypAyurved) April 1, 2023

Today at Patanjali Yogpeeth, Haridwar, I took part in the inauguration ceremony of Patanjali University and the Sannyas Diksha Mahotsav, in the presence of the respected Union Home Minister Shri @AmitShah ji, Hon. Chief Minister Shri @pushkardhami ji, revered @yogrishiramdev ji and revered @Ach_Balkrishna ji. pic.twitter.com/v4sYb6nkXe

— Pradeep Batra (@ThePradeepBatra) March 30, 2023

.@narendramodi_in ji, I am overwhelmed by your sentiment and affection. The good wishes you sent have brought new energy and enthusiasm into my life, and for that I offer my heartfelt gratitude and thanks. #AcharyaBalkrishna #JadiButiDivas #जड़ीबूटीदिवस pic.twitter.com/mF7H8W8HTC

— Acharya Balkrishna (@Ach_Balkrishna) August 4, 2022

Chaturveda Parayan Yajna, "Patanjali Sannyas Mahotsav", live from Rishigram, Haridwar - https://t.co/bnytfaqSSc

— Swami Ramdev (@yogrishiramdev) March 26, 2023

A misleading open letter about sci-fi AI dangers ignores the real risks

Pause Giant AI Experiments: An Open Letter

BuzzFeed Is Quietly Publishing Whole AI-Generated Articles, Not Just Quizzes These read like a proof of concept for replacing human writers.

Vinod Khosla on how AI will ‘free humanity from the need to work’ When ChatGPT-maker OpenAI decided to switch from a nonprofit to a private enterprise in 2019, Khosla was the first venture capital investor, jumping at the opportunity to back the company that, as we reported last week, Elon Musk thought was going nowhere at the time. Now it’s the hottest company in the tech industry.

Google and Apple vets raise $17M for Fixie, a large language model startup based in Seattle

This Uncensored Chatbot Shows What Happens When AI Is Programmed To Disregard Human Decency FreedomGPT spews out responses sure to offend both the left and the right. Its makers say that is the point.

Alibaba considers yielding control of some businesses in overhaul

Elon Musk's AI History May Be Behind His Call To Pause Development Musk is no longer involved in OpenAI and is frustrated he doesn’t have his own version of ChatGPT yet. .......... OpenAI was co-founded by Sam Altman, who butted heads with Musk in 2018 when Musk decided he wasn’t happy with OpenAI’s progress. Several large tech companies had been working on artificial intelligence tools behind the scenes for years, with Google making significant headway in the late 2010s.......... Musk worried that OpenAI was running behind Google and reportedly told Altman he wanted to take over the company to accelerate development. But Altman and the board at OpenAI rejected the idea that Musk—already the head of Tesla, The Boring Company and SpaceX—would have control of yet another company......... “Musk, in turn, walked away from the company—and reneged on a massive planned donation. The fallout from that conflict, culminating in the announcement of Musk’s departure on Feb 20, 2018 ........” After Musk left he took his money with him, which forced OpenAI to become a private company in order to successfully raise funds. OpenAI became a for-profit company in March 2019. .......... Some people are utilizing ChatGPT to write code and even start businesses ...... Tesla is working on powerful AI tech. Tesla requires complex software to run its so-called “Full Self-Driving” capability, though it’s still imperfect and has been the subject of numerous safety investigations......... Musk has had no problem with deploying beta software in Tesla cars that essentially make everyone on the road a beta tester, whether they’ve signed up for it or not. ............ the Future of Life Institute is primarily funded by the Musk Foundation. ......... 
Musk was perfectly happy with developing artificial intelligence tools at a breakneck speed when he was funding OpenAI. But now that he’s left OpenAI and has seen it become the frontrunner in a race for the most cutting edge tech to change the world, he wants everything to pause for six months. If I were a betting man, I’d say Musk thinks he can push his engineers to release their own advanced AI on a six month timetable. It’s not any more complicated than that.

A Guy Is Using ChatGPT to Turn $100 Into a Business Making as Much Money as Possible. Here Are the First 4 Steps the AI Chatbot Gave Him. "TLDR I'm about to be rich." ........ "You have $100, and your goal is to turn that into as much money as possible in the shortest time possible, without doing anything illegal," Greathouse Fall wrote, adding that he would be the "human counterpart" and "do everything" that the chatbot instructed him to do. ......... he managed to raise $1,378.84 in funds for his company in just one day ....... The company is now valued at $25,000, according to a tweet by Greathouse Fall. As of Monday, he said that his business had generated $130 in revenue ....... First, ChatGPT suggested that he should buy a website domain name for roughly $10, as well as a site-hosting plan for around $5 per month — amounting to a total cost of $15......... ChatGPT suggested that he should use the remaining $85 in his budget for website and content design. It said that he should focus on a "profitable niche with low competition," listing options like specialty kitchen gadgets and unique pet supplies. He went with eco-friendly products. ......... Step three: "Leverage social media" ....... Once the website was made, ChatGPT suggested that he should share articles and product reviews on social media platforms like Facebook and Instagram, and on online community platforms such as Reddit to engage potential customers and drive website traffic......... asking it for prompts he could feed into the AI image-generator DALL-E 2 ........ he had ChatGPT write the site's first article ........ Next, he followed the chatbot's recommendation to spend $40 of the remaining budget on Facebook and Instagram advertisements to target users interested in sustainability and eco-friendly products........ Step four was to "optimize for search engines" ....... making SEO-friendly blog posts ........ By the end of the first day, he said he secured $500 in investments. ....... 
his "DMs are flooded" and that he is "not taking any more investors unless the terms are highly favorable."

DMs are flooded.

Cash on hand: $1,378.84 ($878.84 previous balance + $500 new investment)

The company is currently valued at $25,000, considering the recent $500 investment for 2%.

Not taking any more investors unless the terms are highly favorable.

— Jackson Greathouse Fall (@jacksonfall) March 16, 2023

This week's pod is about how one tweet about ChatGPT changed @jacksonfall's life

- Generated 20m+ impressions
- Went from an unknown designer in Oklahoma City to being on CNN
- Grew from 2k to 100k followers on Twitter in 7 days
- Started a "HustleGPT" movement
- And how he…

— GREG ISENBERG (@gregisenberg) March 23, 2023

It's Day 2, y'all! I've given HustleGPT a formal challenge to get to $100,000 cash on hand as quickly as possible.

Here's what it said it's going to do:

1. Allocate budget to hire content creators for our eco-friendly website
2. Explore dropshipping
3. Develop a SaaS product 🤯 pic.twitter.com/xIl7Ogmtrc

— Jackson Greathouse Fall (@jacksonfall) March 16, 2023

Technologies in history that have proven to be bad for humans.

1. Atomic bomb and chemical weapons
2. Cigarettes
3. Thalidomide and DDT

Can’t think of others.

Is AI that bad so as to cause it’s “pause”?

— Mukund Mohan (@mukund) March 30, 2023

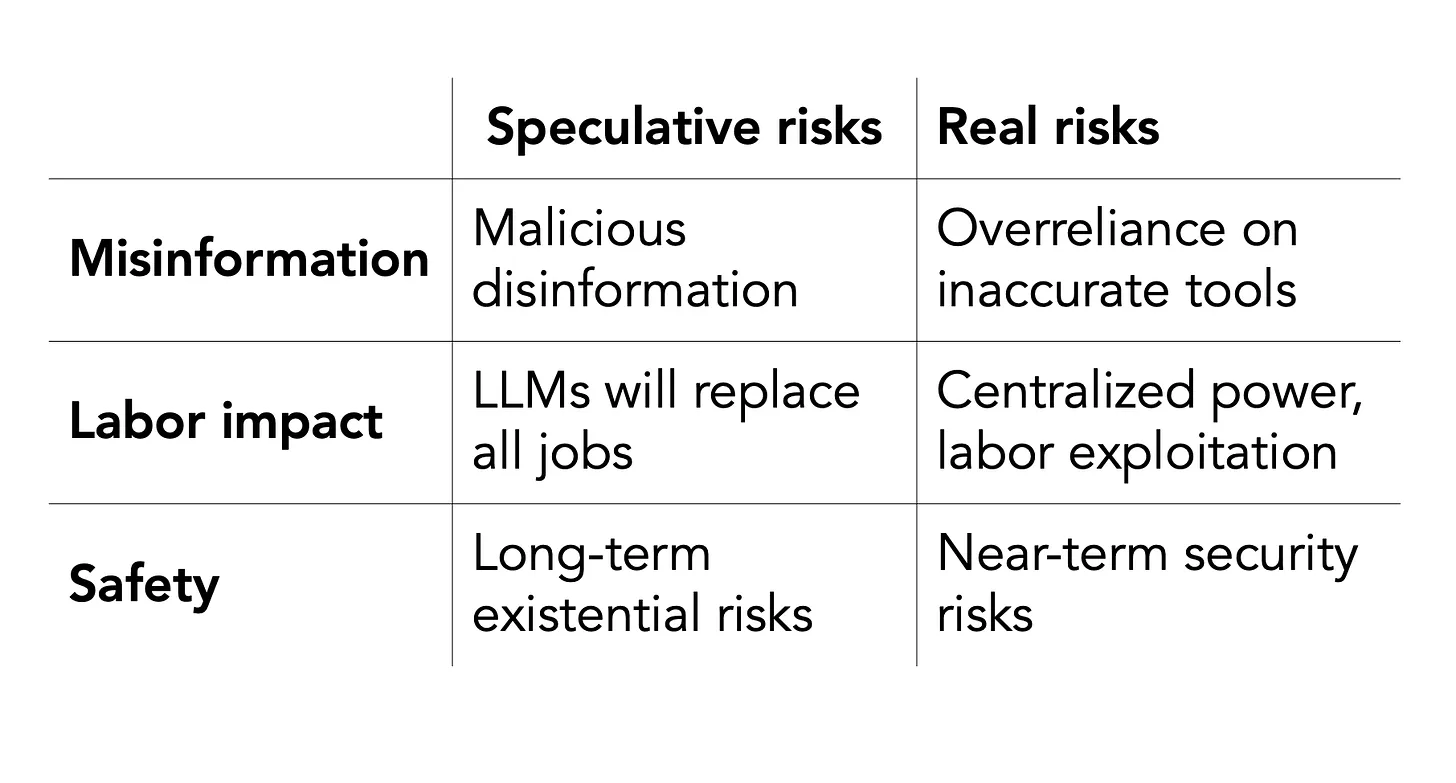

A misleading open letter about sci-fi AI dangers ignores the real risks Misinformation, labor impact, and safety are all risks. But not in the way the letter implies....... We agree that misinformation, impact on labor, and safety are three of the main risks of AI. Unfortunately, in each case, the letter presents a speculative, futuristic risk, ignoring the version of the problem that is already harming people.

Pause Giant AI Experiments: An Open Letter "Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization?" ....... creating disinformation is not enough to spread it. Distributing disinformation is the hard part ........... LLMs are not trained to generate the truth; they generate plausible-sounding statements. But users could still rely on LLMs in cases where factual accuracy is important. ......... CNET used an automated tool to draft 77 news articles with financial advice. They later found errors in 41 of the 77 articles.

It's Hard Fork Friday! This week on the show, Sundar Pichai talks to us about the risks and potential of AI — and says the model powering Bard is getting an upgrade next week https://t.co/wXa56NVmQ8

— Casey Newton (@CaseyNewton) March 31, 2023

The @CaseyNewton and @kevinroose over at the @nytimes interviewed @sundarpichai about Bard/AI etc https://t.co/YedORMpNZd

— Barry Schwartz (@rustybrick) March 31, 2023

India is hunting for new spyware with a lower profile than the controversial Pegasus system with rival surveillance software makers preparing bid. https://t.co/qMisAlXd7a

— Yusuf Unjhawala 🇮🇳 (@YusufDFI) March 31, 2023

If you feel like your startup could be doing better remind yourself...

- all the things you're doing right
- all the progress you've made
- all startups go through hard times
- all the small wins you've made
- all the lessons you've learned

Then get back to building.

— Andrew Gazdecki (@agazdecki) March 31, 2023

The problem with starting a themed fund, if you're a VC, is what if the theme wasn't AI?

— Paul Graham (@paulg) March 31, 2023

Made my first ever github commit ✅

AI is indeed amazing. https://t.co/zhnqkhL10x pic.twitter.com/DaNSnt9ukU

— Alex Macdonald (@alexfmac) March 31, 2023

After you.

— Paramendra Kumar Bhagat (@paramendra) March 31, 2023

#GPT4 is able to pass every exam, from art and history to chemistry.

#GPT5 will be out by year-end.

We're so done. pic.twitter.com/Zk4D2dJOZh

— DataChazGPT 🤯 (not a bot) (@DataChaz) March 15, 2023

Everyone's job description after GPT-5 pic.twitter.com/qW2dpK4wdR

— Liron Shapira (@liron) March 26, 2023

This is how chatgpt-5 will act #ChatGPT #GPT5 #GPT4 pic.twitter.com/Fv8vqH7GPA

— Imtiaz Mamun (@withimtiazmamun) March 25, 2023

Humanity's last Tweet. #ai #robotics #future #gpt4 #GPT5 #chatGPT pic.twitter.com/x60hmCtmeM

— Undetectable.ai (@UndetectableAI) March 24, 2023

GPT-5: can perfectly build any website

GPT-6: can build and run a company

GPT-7: passes Turing test

GPT-8: overthrows world governments

GPT-9: fails to understand how Jira is supposed to work, gives up, asks humans for help

— Chris Bakke (@ChrisJBakke) March 24, 2023

GPTScript - the final nail in the coffin.

The programming language for AI. Can write any program or app efficiently without any prior knowledge.

Based on the upcoming GPT-5, this will eliminate the need for developers. pic.twitter.com/MlWrGJNIIy

— Dan ⚡️ (@d4m1n) March 24, 2023

i have been told that gpt5 is scheduled to complete training this december and that openai expects it to achieve agi.

which means we will all hotly debate as to whether it actually achieves agi.

which means it will.

— Siqi Chen (@blader) March 27, 2023

GPT-5 is rumoured to be trained on 69 quazillion parameters 🤤 pic.twitter.com/HZ4wr1ceNn

— ChatGPT NFT Club (@ChatGPTNFTs) March 23, 2023

GPT-5 is not going to be AGI.

It's almost certain that ~no~ GPT model will be AGI.

It's highly unlikely any model optimized using methods we use today (gradient descent) will ever be AGI.

The GPT models coming out will change the world for sure, but the over-hype is wild.

— Harrison Kinsley (@Sentdex) March 29, 2023

I worry that an unintended side effect of locking down these models is that we are training humans to be mean to AIs and gaslight them in order to bypass the safeties. I am not sure this is good for the humans, or that it will be good for GPT-5. pic.twitter.com/49gh0vvvY9

— Eliezer Yudkowsky (@ESYudkowsky) March 22, 2023

The human instrumentality project will commence now! 🫴🏼 #GPT5 pic.twitter.com/qMhRl3GsLm

— Carlos Davila (@Carlosdavila007) March 30, 2023

i do not know with what data GPT-5 will be trained, but GPT-6 will be trained with sticks and stones

— James Rosen-Birch @provisionalidea@techhub.social (@provisionalidea) March 29, 2023

Rumor is GPT-5 was able to configure and run a Kubernetes cluster, which triggered this call for a pause https://t.co/OVYOjINUZW

— Pramod Gosavi (@ppgosavi) March 30, 2023

Peculiar AI jobs await us? #GPT5 https://t.co/oEAuZ6nvPg

— Milad Khademi Nori (@khademinori) March 29, 2023

My biggest issue with the moratorium, if it were to happen?

It would mean that I would have to wait *six* extra months to say “I told you so”, when we found out that GPT-5 continued to do stupid stuff like this. pic.twitter.com/qlXfCxZ36W

— Gary Marcus (@GaryMarcus) March 30, 2023

GPT4: writes your code.

GPT5: passes Turing test.

GPT6: can do Concur.

GPT7: still can’t manage Workday.

— Benedict Evans (@benedictevans) March 24, 2023

😂just wait until #GPT5! #ChatGPT pic.twitter.com/XKJ6adIlsx

— 🇺🇦Evan Kirstel #B2B #TechFluencer (@EvanKirstel) March 30, 2023

GPT5 Next Gen : 1st Upcoming Abilities To Transform AI + The Future of Tech

via / @OpenAI #DataScientists #MachineLearning #digitalhealth #eHealth #innovation #technology #web3 #metaverse #python #smartcities #robots #Robotics #AI #GenerativeAI #ChatGPT #NLP #Analytics #tech… pic.twitter.com/I0PZHHrwOz

— Aritra Ghosh (@IamAritraG) March 31, 2023

Will GPT5 start designing GPT6? https://t.co/GXMDeo3Uwl

— Vinod Khosla (@vkhosla) March 26, 2023

What do YOU think? A lot of people want to know.

— Paramendra Kumar Bhagat (@paramendra) March 31, 2023

GPT-5 uses p-values correctly

GPT-6 is a Bayesian

GPT-7 doesn't care. Just wants answers

GPT-8 reinvents machine learning

GPT-9 trains a *larger* language model so it can do data science via prompt engineering

— Kareem Carr | Data Scientist (@kareem_carr) March 30, 2023

Anyone else seeing this? #chatgpt #gpt5 pic.twitter.com/ctsALvJrv7

— Esco Obong (@escobyte) March 22, 2023

GPT-1: A New Autocomplete Hope

GPT-2: Autocomplete Strikes Back

GPT-3: Return of the Autocomplete

GPT-4: The Autocomplete Menace

GPT-5: Attack of the Autocomplete

GPT-6: Revenge of the Autocomplete

GPT-7: The Autocomplete Awakens

— David Williams (@David_Williams) March 25, 2023

so how does an LLM, even gpt5, get to agi?

don’t you still need to prompt it?

doesn’t it need to like, do stuff, have goals?

well, imagine you do something like this: give it a goal, put it in a loop, with all of the power of chatgpt plugins, and with gpt5 instead: https://t.co/R3lE2LYl5M

— Siqi Chen (@blader) March 27, 2023

OpenAI just launched GPT-4 this month but new reports suggest GPT-5 could arrive before the end of 2023. Here's what we know so far: https://t.co/Jy1jv7qhnD

— Windows Central (@windowscentral) March 31, 2023

Just make sure to say “please” and “thank you” to GPT-5 and you’ll be fine.

— AI Breakfast (@AiBreakfast) March 23, 2023

Goldman Sachs just dropped this report about AI replacing lawyers. Says, 44% legal work can be automated.

While Elon Musk calls for 6 months delay in GPT 5 AI developement pic.twitter.com/LK1VnasgWx

— Rossi Dan (@roshandhanai1) March 29, 2023

ok, we got there in the end. GPT-4 would sign the letter against GPT-5, duh: pic.twitter.com/ByIy3pbNn4

— Alex Gerko (@AlexanderGerko) March 29, 2023

If GPT-5 wants to play a game I suggest you pass pic.twitter.com/UmPfyjRn61

— PhotographicFloridian (@JackLinFLL) March 30, 2023

I asked #BardAI whether it's better than chatGPT and it had this to say... #GPT5 pic.twitter.com/KfAAWXNrhz

— CardzRegard (@CardzRegard) March 29, 2023

Just borrowed my friend’s jacket wdyt?

— Elon Musk (@elonmusk) March 31, 2023

You know we live on a rock in the middle of a billion trillion stars and none of this really matters right?

— Chad Hurley (@Chad_Hurley) March 31, 2023

The moon is just the right size, at just the right distance to make earth stable enough for life. How delicate! And precious. The universe is vast so you may do the math on life on earth.

— Paramendra Kumar Bhagat (@paramendra) March 31, 2023

“No one cared. No reporter even thought YC was interesting. They wouldn’t even call me back. But we just kept moving forward, little by little, not caring what people thought about us.” @jesslivingston on co-founding @ycombinator

Great reminder for founders in the early days.

— Justin Gordon (@justingordon212) April 1, 2023

I've said this a lot and will say it again: SUGAR IS POISON. Take the proof from @drmarkhyman himself. https://t.co/EJUkqliL9S pic.twitter.com/wcz0EmCsJA

— Peter H. Diamandis, MD (@PeterDiamandis) April 1, 2023

One difference between worry about AI and worry about other kinds of technologies (e.g. nuclear power, vaccines) is that people who understand it well worry more, on average, than people who don't. That difference is worth paying attention to.

— Paul Graham (@paulg) April 1, 2023

Most of the recommendation algorithm will be made open source today. The rest will follow.

Acid test is that independent third parties should be able to determine, with reasonable accuracy, what will probably be shown to users.

No doubt, many embarrassing issues will be… https://t.co/41U4oexIev

— Elon Musk (@elonmusk) March 31, 2023

This is a time for iteration AND innovation.

What's the difference?

Iteration is the improvement over something that exists. It's doing things better, faster, more efficiently, at scale...

Innovation is doing new things that create new value.

Disruption is doing new things… pic.twitter.com/Ca3fhfAebs

— Brian Solis (@briansolis) March 31, 2023

Twitter recommendation source code now available to all on GitHub https://t.co/9ozsyZANwa

— Elon Musk (@elonmusk) March 31, 2023

Twitter open source Spaces discussion happening now!

https://t.co/VpS6frbOhT

— Elon Musk (@elonmusk) March 31, 2023

GPT-4 for curiosity-led exploration of a concept: https://t.co/9XHQdQKFbc

— Greg Brockman (@gdb) March 31, 2023

Who do you trust most right now?

Explain your answer with a reply.

— @jason (@Jason) March 31, 2023

— Paramendra Kumar Bhagat (@paramendra) April 1, 2023

— Elon Musk (@elonmusk) March 31, 2023

The Pope wore it better. pic.twitter.com/5hz3Xd2PE2

— Rocío GB (@chiogb66) March 31, 2023

Sundar https://t.co/DUC0McwDJZ @sundarpichai @Google @GoogleAI

— Paramendra Kumar Bhagat (@paramendra) April 1, 2023

While acknowledging that Bard has its weaknesses, Pichai claimed that an injection of raw power was imminent. $GOOGL https://t.co/gIMbdPU4dt

— Mukund Mohan (@mukund) April 1, 2023